Reference-based metrics

In general, generative AI produces outputs for which many different responses are equally valid. For example, there are many possible responses to "tell me a story about a dragon" that would all be valid but differ wildly. For other use cases, however, the correct answer can be known with a high degree of certainty. For example, if the prompt is "Which of these colors is in the middle of a rainbow: red, yellow, or violet? Respond with the color and no other text.", we would expect the response to be "yellow". For these cases, it's appropriate to use reference-based metrics.

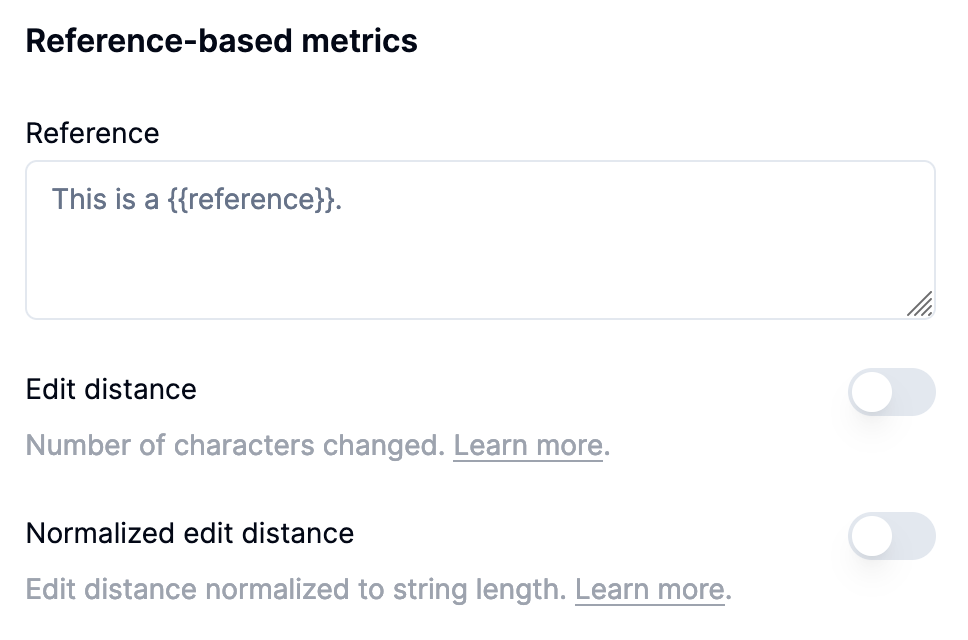

To use reference-based metrics in Airtrain, populate the "Reference" field with a template that describes what the reference should look like. The template follows the same templating rules as prompt and response templates, and the fields available to it are pulled from the JSONL or CSV file you uploaded. For example, if your source data has a field called "answer", and the model is supposed to output the answer by saying "[ANSWER]: " followed by the answer, your reference template might look like this:

[ANSWER]: {{answer}}
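
To make the substitution concrete, here is a minimal Python sketch of how such a template could be filled in from one row of source data. It is an illustration only: the `render_reference` helper and the sample row are hypothetical, and the regex-based substitution is an assumption that handles simple `{{field}}` placeholders, not a description of Airtrain's actual templating engine.

```python
import json
import re


def render_reference(template: str, row: dict) -> str:
    """Replace each {{field}} placeholder with the matching value from row.

    Hypothetical helper: it only handles plain {{field}} placeholders and
    raises KeyError if the row has no such field.
    """
    def substitute(match: re.Match) -> str:
        return str(row[match.group(1)])

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


# One line of a JSONL file, made up for illustration.
row = json.loads('{"question": "Which color is in the middle?", "answer": "yellow"}')

reference = render_reference("[ANSWER]: {{answer}}", row)
print(reference)  # [ANSWER]: yellow
```
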

Once you have provided a reference, you can toggle which metrics to compute against it.

Metrics

Edit distance

This is the Levenshtein distance between the reference text and the model's actual response. It is the minimum number of single-character insertions, deletions, or substitutions needed to convert the model's response into the reference.
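
For intuition, the Levenshtein distance can be computed with the standard dynamic-programming approach sketched below. This is a generic textbook implementation, not Airtrain's code; the function name `edit_distance` and the example strings are chosen here for illustration.

```python
def edit_distance(response: str, reference: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn
    response into reference."""
    # previous[j] holds the distance between the response prefix processed
    # so far and the first j characters of the reference.
    previous = list(range(len(reference) + 1))
    for i, response_char in enumerate(response, start=1):
        current = [i]  # turning i characters into "" takes i deletions
        for j, reference_char in enumerate(reference, start=1):
            substitution_cost = 0 if response_char == reference_char else 1
            current.append(min(
                previous[j] + 1,                      # delete response_char
                current[j - 1] + 1,                   # insert reference_char
                previous[j - 1] + substitution_cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


# The comparison is case- and punctuation-sensitive: "Y" -> "y" is one
# substitution and the trailing "." is one deletion.
print(edit_distance("Yellow.", "yellow"))  # 2
print(edit_distance("yellow", "yellow"))   # 0
```

Because every differing character counts, a response that is semantically right but formatted differently from the reference still receives a non-zero distance.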

Normalized edit distance

This is the Levenshtein edit distance divided by the length, in characters, of the longer of the response and the reference. This can be useful if you care more about the error rate (i.e. errors per character) across texts of different lengths than about the absolute number of errors.
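
The normalization can be sketched in Python as follows. The helper names are hypothetical, and returning 0.0 when both strings are empty is an assumption made here to avoid dividing by zero; the source does not say how that case is handled.

```python
def levenshtein(a: str, b: str) -> int:
    """Levenshtein distance using a single rolling row."""
    row = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        # diagonal is the distance for the two prefixes one character shorter.
        diagonal, row[0] = row[0], i
        for j, char_b in enumerate(b, start=1):
            diagonal, row[j] = row[j], min(
                row[j] + 1,                    # deletion
                row[j - 1] + 1,                # insertion
                diagonal + (char_a != char_b), # substitution or match
            )
    return row[-1]


def normalized_edit_distance(response: str, reference: str) -> float:
    """Edit distance divided by the length of the longer string."""
    longest = max(len(response), len(reference))
    if longest == 0:
        return 0.0  # assumption: two empty strings count as identical
    return levenshtein(response, reference) / longest


# One missing character in a 16-character reference.
print(normalized_edit_distance("[ANSWER]: yelow", "[ANSWER]: yellow"))  # 0.0625
```

Since the edit distance can never exceed the length of the longer string, the result always falls between 0 (identical) and 1 (nothing in common).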
